Edward Fish, PhD

👋 I’m Ed, a Senior Research Fellow at the Centre for Vision, Speech and Signal Processing (CVSSP) at the University of Surrey, where I work on computer vision for accessibility with Professor Richard Bowden in the Cognitive Vision Group.

I recently completed my PhD in Efficient Multi-Modal Video Understanding, supervised by Dr. Andrew Gilbert. Currently I am focussed on research in Automated Sign Language Translation as part of the EPSRC project Sign GPT alongside work on AI for efficient Sign Language Annotation funded by Google.org.

Prior to my PhD, I worked for a number of social enterprises focussed on improving access to careers in computing and the creative industries. I’m always happy to help review CVs and university/college applications, and to provide advice where I can. Simply drop me an email or a message on LinkedIn.

You can find my publications and CV here.

📢 News

- November 2025: Gave a talk at the BMVA Symposium on Multi-Modal Large Language Models at the British Computer Society, London. You can watch the talk here.

- September 2025: 🎉 Our paper, “Geo-Sign: Hyperbolic Contrastive Regularisation for Geometrically Aware Sign Language Translation”, is accepted to NeurIPS 2025!

- August 2025: I obtained my BSL 101-103 certification. I’m now studying towards Level 2.

- August 2025: I’m chairing the BMVA one-day symposium on AI for Sign Language Translation, Production, and Linguistics on December 10th. Register to present or attend here. We will announce keynotes soon.

- July 2025: 🎉 Our paper, “VALLR: Visual ASR Language Model for Lip Reading”, is accepted to ICCV 2025!

- July 2025: 🎉 Our paper, “Prompt Learning with Optimal Transport for Few-Shot Temporal Action Localization”, is accepted to the CLVL workshop at ICCV 2025.

- June 2025: I’m at CVPR 2025 co-chairing the Sign Language Recognition, Translation, and Production (SLRTP) workshop. You can read our paper on the competition we ran here.

- May 2025: Code and paper for “Geo-Sign: Hyperbolic Contrastive Regularisation for Geometrically Aware Sign Language Translation” are available online here. (Under review 🤞)

Current PhD Students

Marshall Thomas: “Integrating Non-Manual Features for Robust Sign Language Translation” (Co-Supervisor with Prof. Richard Bowden)

Karahan Şahin: “Unified representations for Sign Language Translation and Production” (Co-Supervisor with Prof. Richard Bowden)

📝 2025 Publications

This is a selection of my papers from this year. For a complete list, please see my publications page.

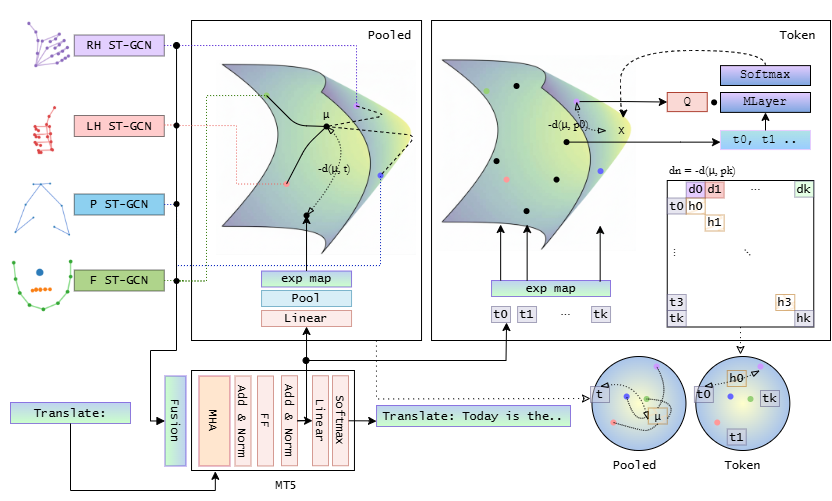

Geo-Sign: Hyperbolic Contrastive Regularisation for Geometrically Aware Sign Language Translation

Our pose-only method beats state-of-the-art pixel-based approaches by using a novel hyperbolic-geometry regulariser to inject hierarchical structure into a language model.

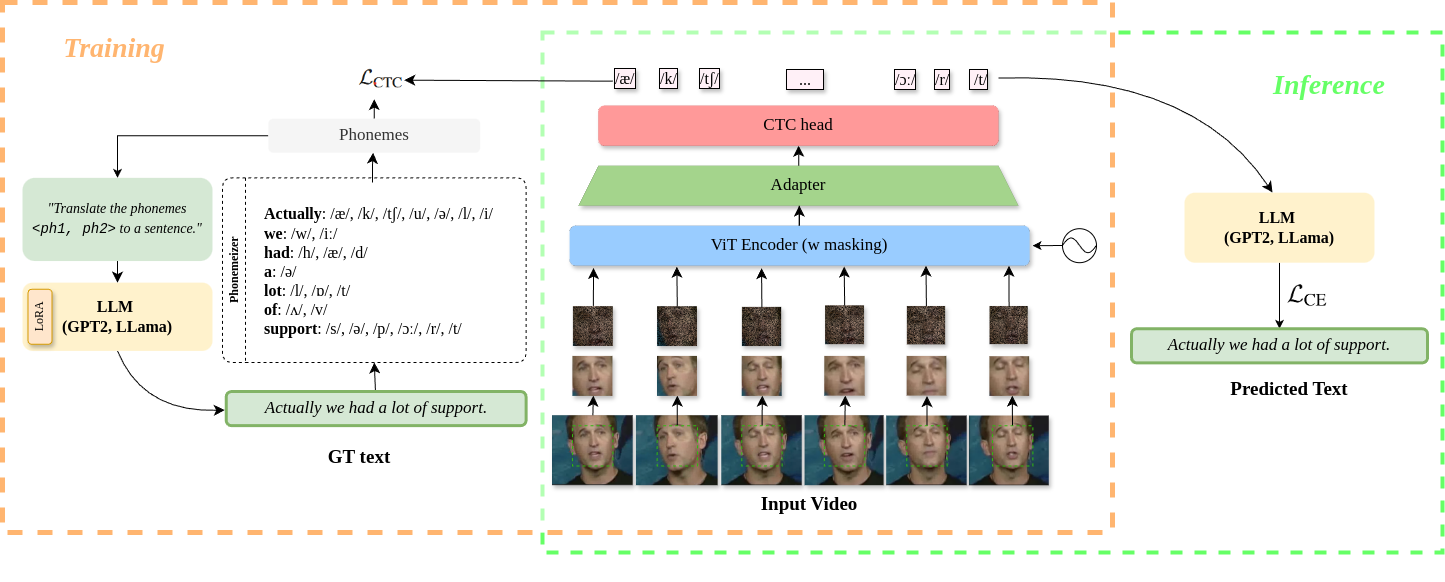

VALLR: Visual ASR Language Model for Lip Reading

Achieves state-of-the-art results in lip reading with 99% less training data by deconstructing the problem into phoneme recognition and sentence reconstruction.

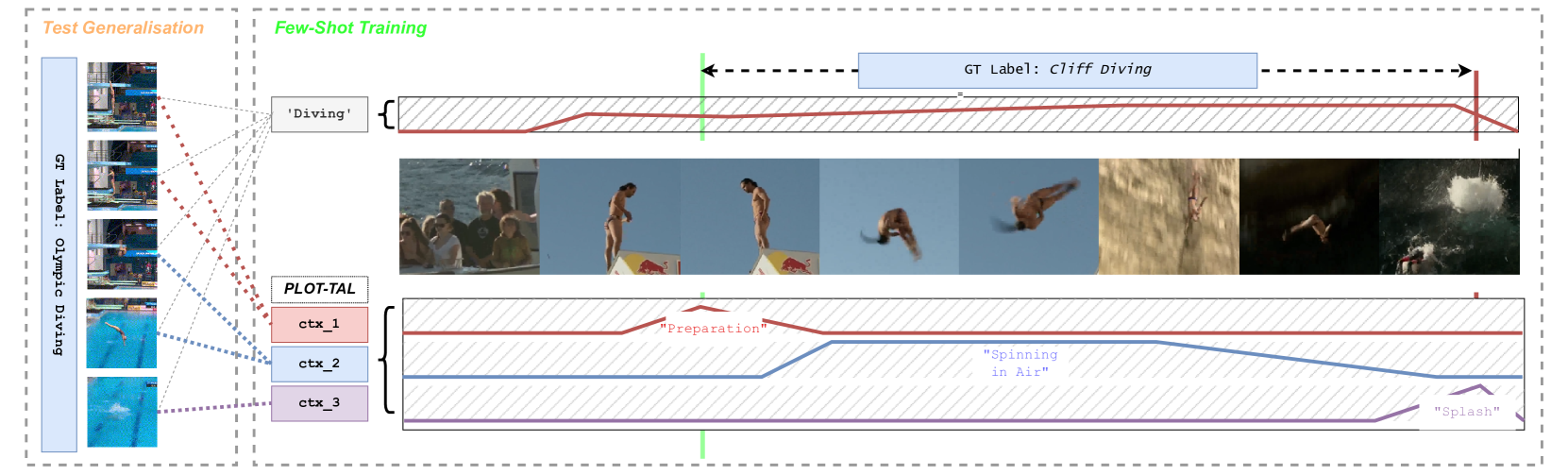

PLOT TAL: Prompt Learning with Optimal Transport for Few-Shot Temporal Action Localization

A novel approach for few-shot temporal action localization, leveraging prompt learning and optimal transport.